Ellas Investigan | María José Apolo: Musical Images

May 2023. María José Apolo, a former IMFD student and a graduate in Computer Science Engineering (Ingeniería Civil Informática) from the Universidad Técnica Federico Santa María, works in the field of creative artificial intelligence. She researches how style can be transferred from music to images using deep learning.

In the study “Bimodal style transference from musical composition to image using deep generative models”, carried out with Marcelo Mendoza, an IMFD researcher and faculty member in the Department of Computer Science at the Pontificia Universidad Católica (DCC UC), they examine style transfer from songs to album covers. The starting point is that different musical styles tend to use certain kinds of images as their representative covers.

The goal of the research is to determine whether a system can automatically create, using the music itself as input, an image that would be suitable, for example, as an album cover.

EACH SONG WITH ITS IMAGE

To achieve this, the first step was to feed a model with data: “The dataset I built was multimodal: each song, represented as a spectrogram, was paired with its album cover. The model was fed approximately 20,000 (song, cover) pairs,” explains María José Apolo.

What does it mean that the songs were processed as spectrograms? A spectrogram is a visual representation of sound that shows how the intensity of each frequency changes over the duration of a track. It is a far richer version of what we see, for example, when a computer's microphone settings display a horizontal bar that reacts to the volume of our voice and moves only when we speak. That bar shows just the overall intensity at each moment, whereas a spectrogram also breaks the sound down by frequency.
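To make the idea concrete, the sketch below computes a magnitude spectrogram with a short-time Fourier transform using only NumPy: the signal is cut into overlapping windowed frames, and each frame is converted into intensities per frequency. The window size, hop length, and the synthetic 1 kHz test tone are assumptions chosen for illustration; the article does not say how the spectrograms in the study were computed.

```python
import numpy as np

def spectrogram(signal: np.ndarray, n_fft: int = 512, hop: int = 256) -> np.ndarray:
    """Magnitude spectrogram: rows are frequency bins, columns are time frames."""
    if len(signal) < n_fft:
        raise ValueError("signal is shorter than one analysis window")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    # Slice the signal into overlapping frames and taper each one with the window.
    frames = np.stack([signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    # rfft of each frame gives n_fft // 2 + 1 frequency bins (intensity per frequency).
    mags = np.abs(np.fft.rfft(frames, axis=1))
    return mags.T  # shape: (frequency_bins, time_frames)

sr = 8000                              # assumed sample rate in Hz
t = np.arange(sr) / sr                 # one second of samples
tone = np.sin(2 * np.pi * 1000 * t)    # synthetic 1 kHz tone
spec = spectrogram(tone)
peak_bin = spec.mean(axis=1).argmax()  # frequency bin with the most energy
peak_hz = peak_bin * sr / 512          # convert bin index back to Hz
print(spec.shape, peak_hz)             # (257, 30) 1000.0
```

Each column is one moment of the track and each row one frequency, so a louder passage shows up as larger values and a higher note as energy in higher rows.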

Spectrograms of similar songs tend to share similar measurable features, so an AI model can analyze them to identify which songs are close to each other and which are far apart in the musical spectrum.

Next, the researchers began submitting queries to the model. When asked about a specific song, which the system represents through its spectrogram, the model returned the 100 covers it considered most similar or closest to that song. María José notes that most of the covers returned belonged to the same genre as the queried song or to similar genres.
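The article does not describe how the model compares a song with the covers. One common way to implement this kind of query, shown here only as a hypothetical sketch, is to assume that some trained encoder has already mapped every song and every cover into a shared vector space, and then rank the covers by cosine similarity to the song's vector. The function name `top_k_covers`, the two-dimensional vectors, and the genre labels are illustrative assumptions, not data or code from the study.

```python
import numpy as np

def top_k_covers(song_vec: np.ndarray, cover_matrix: np.ndarray, k: int = 100) -> np.ndarray:
    """Indices of the k covers most similar to the song (cosine similarity), best first."""
    song = song_vec / np.linalg.norm(song_vec)
    covers = cover_matrix / np.linalg.norm(cover_matrix, axis=1, keepdims=True)
    sims = covers @ song                 # cosine similarity of every cover to the song
    k = min(k, len(sims))                # cannot return more covers than exist
    # Sorting the negated similarities gives descending order; "stable" breaks ties by index.
    return np.argsort(-sims, kind="stable")[:k]

# Hypothetical embeddings (assumption): in practice they would come from a trained encoder.
covers = np.array([
    [1.0, 0.0],    # cover 0
    [0.9, 0.1],    # cover 1
    [0.0, 1.0],    # cover 2
    [-1.0, 0.0],   # cover 3
])
genres = ["rock", "rock", "jazz", "classical"]  # hypothetical genre labels
query = np.array([1.0, 0.05])                   # hypothetical song embedding

idx = top_k_covers(query, covers, k=2)
print([genres[i] for i in idx])  # ['rock', 'rock']
```

With `k=100` over a real cover collection, this mirrors the kind of query described above, and the genre labels make it easy to check how many retrieved covers share the genre of the queried song.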

CREATING COVERS WITH AI

In a second phase, the researcher used the 100 covers the system returned for a queried song to train a generative image model, similar to systems such as DALL-E or Stable Diffusion. The model extracted the features those covers had in common and began creating new ones.

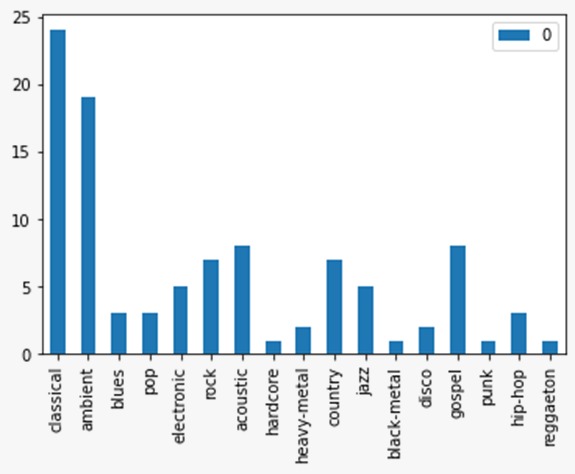

The aim was that, given a song of a particular genre, the generative system would create an entirely new image that genuinely matched the track. This was done with songs from 10 different genres, and the new covers produced by the generative model obtained a score of 20.89 on a scale from 0 to 250, where values closer to zero indicate greater precision or similarity.

“I chose this research topic because I find it fascinating how deep learning can model and interpret an abstract and subjective question such as style transfer from a musical work to an image. It requires translating multimodal attributes that are shaped by each individual's own concepts and by their biological and social background,” explains María José Apolo.

Looking ahead, the researcher hopes to build a system that lets emerging artists without sufficient resources upload an original song to a platform and obtain an original album cover that matches their music.