IMFD researchers present work analyzing the architecture of the “Transformer” neural network

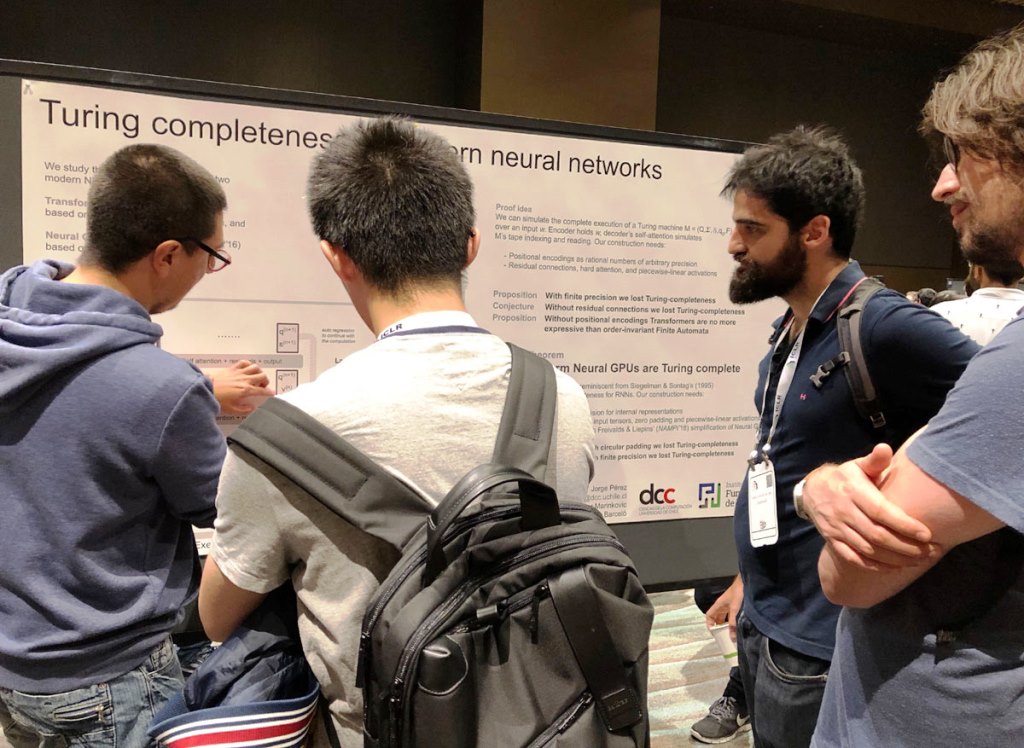

“On the Turing Completeness of Modern Neural Network Architectures” is the title of the research that Jorge Pérez, a faculty member of the Department of Computer Science (DCC) at the Universidad de Chile and an IMFD researcher, presented at the International Conference on Learning Representations (ICLR 2019), held in New Orleans, United States. It is the most important international conference in the field of deep learning.

The work was carried out jointly by Jorge Pérez; Pablo Barceló, also a DCC faculty member and deputy director of the Instituto Milenio Fundamentos de los Datos (IMFD); and Javier Marinkovic, an undergraduate Computer Science student.

Jorge Pérez explained that deep neural networks are now used to make decisions in many areas, such as image and text classification, machine translation, and content recommendation. However, he noted that the computational power of these networks is not fully understood from a theoretical standpoint. “In our paper we analyze the computational power of one of the most popular deep neural network architectures today, called the Transformer. This is the architecture Google uses, for example, for its machine translation system, but it has been used for many other tasks, even generating music” (a piece of music created by this network can be found here: https://bit.ly/2HlOoV2).

In this research, the authors proved that the computational power of the Transformer is as high as is possible for an automatic machine, a notion known as Turing completeness. “This means that the architecture Google uses to translate or generate music has the power to implement any possible algorithm. Before our result, it was not clearly known what the limit of this neural network's power was. The paper also shows which parts of this network's architecture make it so powerful, and that removing certain parts would indeed reduce its power,” Pérez concluded.

A summary of the paper (in English) is available at https://bit.ly/30q2E6q

Source: DCC Communications.