Empowering diversity in machine learning datasets: Jocelyn Dunstan's work at NeurIPS 2024.

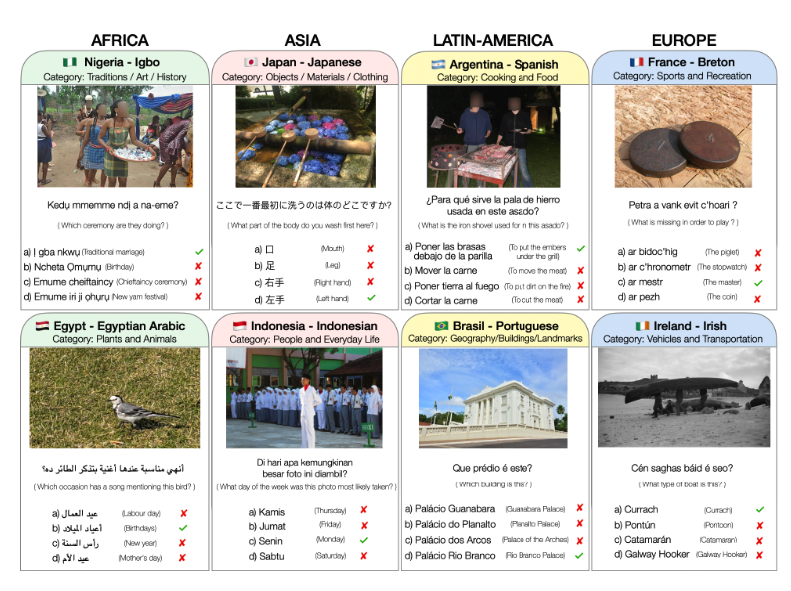

November, 2024. More than 9,000 questions, in 26 languages and from 28 countries, are part of the work carried out for "CVQA: Culturally-diverse Multilingual Visual Question Answering Benchmark", the paper accepted at NeurIPS 2024.the paper accepted in NeurIPS 2024, co-authored by Jocelyn Dunstan Escudero, IMFD researcher and IMC and DCC UC academic, together with Paula Silva, IMFD communications coordinator. NeurIPS has become the most prestigious conference in the world in the area of computational learning.

A problem of representativeness

"Visual question answering (VQA) is an important task in multimodal artificial intelligences, which require models to understand and reason about the knowledge present in visual and textual data," the researcher details. Most datasets and models of this type, however, focus primarily on English and some of the world's major languages, with images centered on what is generally known as the global north, leaving the rest of the world underrepresented. "While there have been recent attempts to increase the number of languages included in these datasets, there is still a lack of diversity in resource-poor languages," explains Jocelyn Dunstan, noting that while some datasets extend the text into other languages, either through translation or other methods, they tend to keep the same images, resulting in limited cultural representation.

This is the framework for the creation of "CVQA: Culturally-diverse Multilingual Visual Question Answering Benchmark".a new culturally-diverse multilingual visual question answering benchmark dataset designed to cover a rich set of languages and regions, in which native speakers and cultural experts were actively sought to be involved in the data collection process.

In the paper, presented orally at NeurIPS, highlights the inclusion of images and cultural questions from 28 countries on four continents, 26 languages and 11 alphabets, with a total of 9,000 questions. "We compared several Multimodal Large Language Models (MLLM) with CVQA and demonstrated that the dataset challenges more advanced models. This test will serve as a probing evaluation set for assessing the cultural bias of multimodal models and will hopefully encourage further research efforts to increase cultural awareness and linguistic diversity in the field," they highlight in the presented research.

For Paula Silva, "this was a work that allowed me to occupy a great diversity of knowledge about Chilean culture, and it was also very entertaining to do, since we had to look for images, always respecting the copyright, and then create the questions and answers based on what was recognizable for a Chilean person". About the opportunity to participate in a study like this one, he emphasizes that "the incorporation of diversity and the search for representativeness of different cultures is one of the most important challenges in the development of AI today, and from this point, the contribution that we can make from the countries of the southern cone is very relevant".

NeurIPS has been held since 1987 and has become the world's most prestigious conference in its field. Each year it brings together more than 10,000 academics and industry representatives and is highly competitive. At each call for papers, around 10,000 scientific publications are collected from researchers specializing in various fields related to machine learning and artificial intelligence, such as neuroscience and natural language processing.

Check out the video about the research at: https://www.instagram.com/reel/DCjx1rpOi5n/