Ellas Investigan: María José Apolo: Musical Images

María José Apolo, a former IMFD student and Computer Engineering graduate of the Universidad Técnica Federico Santa María, works in the field of creative artificial intelligence, investigating how deep learning can be used to transfer style from music to images.

In the study "Bimodal style transference from musical composition to image using deep generative models", carried out with Marcelo Mendoza, IMFD researcher and Computer Science academic at the Pontificia Universidad Católica (DCC UC), they examine how style transfers from songs to album covers, building on the observation that different musical styles tend to use certain kinds of images as their representative covers.

The goal of the research is to determine whether a system can automatically create, using music as its input, an image that is suitable, for example, as album cover art.

EACH SONG WITH ITS IMAGE

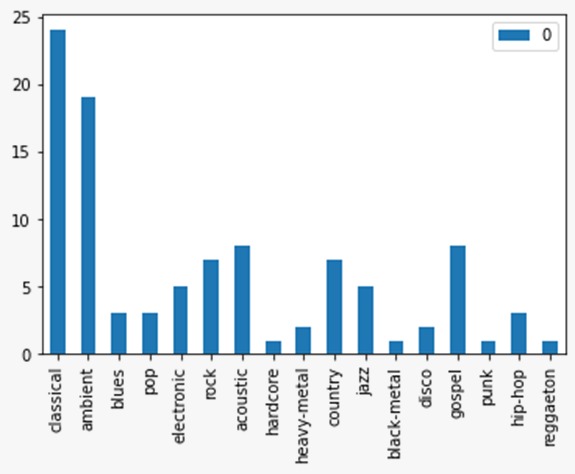

To achieve this, the first step was to feed a model with data: "The dataset I generated is a multimodal dataset in which each song, represented as a spectrogram, is paired with its album cover. The model was fed approximately 20,000 (song, cover) pairs," explains María José Apolo.

What does it mean that the songs were processed as spectrograms? A spectrogram is a visual representation of sound that shows how the intensity of each frequency varies over the duration of a song. It is a far richer version of the level indicator we see when using a computer microphone: that horizontal bar only shows how loud our voice is (intensity) and moves only while we speak, whereas a spectrogram also breaks the sound down by frequency, showing how much energy each pitch band carries at every moment.
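For readers who want to see the idea concretely, here is a minimal Python sketch of how a spectrogram can be computed with a short-time Fourier transform: the signal is cut into overlapping frames, each frame is tapered with a Hann window, and a discrete Fourier transform measures the strength of each frequency in that frame. This is a generic textbook construction, not the preprocessing used in the study; the function name `spectrogram` and the parameters `frame_size` and `hop` are illustrative assumptions, and real pipelines would use an optimized FFT library and often a mel or logarithmic frequency scale.

```python
import cmath
import math


def spectrogram(signal, frame_size=64, hop=32):
    """Short-time Fourier transform magnitudes (illustrative, hypothetical parameters).

    Returns one list per frame; entry k is the strength of frequency bin k,
    for k = 0 .. frame_size // 2.
    """
    # Periodic Hann window tapers each frame's edges to reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size) for n in range(frame_size)]
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        chunk = [signal[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        for k in range(frame_size // 2 + 1):
            # Naive discrete Fourier transform for bin k (an FFT would be used in practice).
            coefficient = sum(
                chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n in range(frame_size)
            )
            magnitudes.append(abs(coefficient))
        frames.append(magnitudes)
    return frames


# A pure 1000 Hz tone sampled at 8000 Hz: with 64-sample frames each bin spans
# 8000 / 64 = 125 Hz, so the energy should peak in bin 1000 / 125 = 8.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(800)]
frames = spectrogram(tone)
peak_bins = [max(range(len(f)), key=f.__getitem__) for f in frames]
print(len(frames), set(peak_bins))  # 24 {8}
```

Each row of `frames` is one vertical slice of the picture; stacking the rows side by side gives the familiar time-versus-frequency image that a model can then compare across songs.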

Spectrograms of similar songs tend to share measurable characteristics, so an AI model can analyze them to identify which songs are musically close to one another and which are distant.

Next, the researchers began querying the model: given a specific song, represented by its spectrogram, the model returned the 100 covers it considered most similar to that song. María José notes that most of the returned covers belonged to the same genre as the queried song or to similar genres.
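The article does not describe how the model measures closeness between a song and a cover. A common approach in cross-modal retrieval, assumed here purely for illustration, is to map both songs and covers into a shared vector space and rank covers by cosine similarity to the song's vector. The sketch below shows only that ranking step; the embeddings, the function names `cosine_similarity` and `top_k_covers`, and the toy vectors are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_covers(song_vector, cover_vectors, k=100):
    """Return names of the k covers whose (hypothetical) embeddings are closest to the song."""
    ranked = sorted(
        cover_vectors.items(),
        key=lambda item: cosine_similarity(song_vector, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


# Toy 2-D embeddings standing in for learned song and cover vectors.
covers = {"rock_a": [0.9, 0.1], "jazz_b": [0.0, 1.0], "rock_c": [1.0, 0.2]}
print(top_k_covers([1.0, 0.0], covers, k=2))  # ['rock_a', 'rock_c']
```

With the default `k=100`, the same function returns up to the 100 highest-ranked covers, matching the number reported in the study; for a catalog of about 20,000 covers a brute-force scan like this stays cheap, although larger systems usually rely on approximate nearest-neighbor indexes.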

CREATING COVERS WITH AI

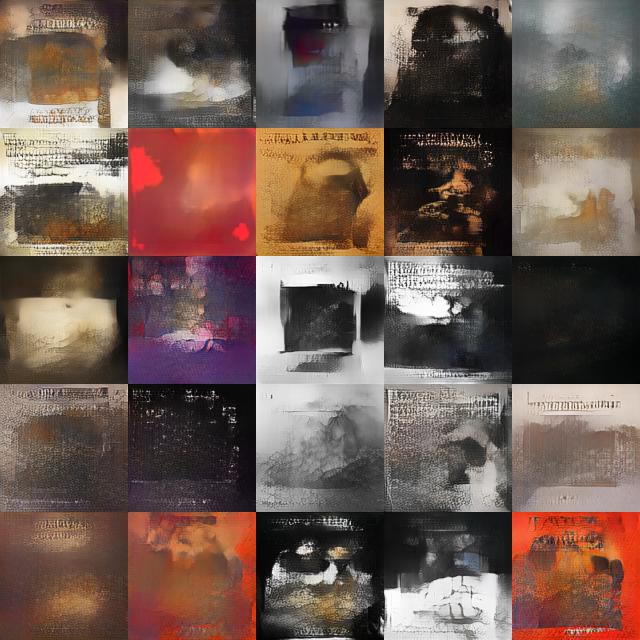

In a second phase, the researcher used the 100 covers returned for a queried song to train a generative image model, similar in kind to systems such as DALL-E or Stable Diffusion, which extracted the features those covers had in common and began creating new ones.

The aim was that, given a song of a certain genre, the generative system would create a completely new image that genuinely matched the song. The experiment was run with songs from 10 different genres, and the new cover images produced by the generative model obtained a score of 20.89 on a scale of 0 to 250, where values closer to zero indicate greater similarity.

"I chose this research topic because I find it very interesting how, from the field of deep learning, it is possible to model and interpret an abstract and subjective question, such as the transfer of style from a musical work to an image, which requires translating multimodal attributes shaped by the individual's own concepts and by their biological and social background," explains María José Apolo.

Looking ahead, the researcher hopes to build a system that lets emerging artists without sufficient resources upload an original song to a platform and obtain an original album cover that matches it.