IMFD researcher to present at major logic and automata conference

The 2024 edition of Highlights will be held from September 16 to 20 at the University of Bordeaux (France). Pablo Barceló's talk will cover three case studies that highlight the key role of logic in improving the understanding of modern machine learning architectures.

The Laboratoire Bordelais de Recherche en Informatique (LaBRI), which operates at the University of Bordeaux (France) and brings together more than 100 researchers, will this year host Highlights, one of the world's most important conferences in the fields of model theory, automata theory, databases and games for logic and verification. The 2024 edition, to be held from September 16 to 20, will feature four invited speakers, including Pablo Barceló, director of the Institute of Mathematical and Computational Engineering (IMC UC).

"Highlights brings together the best papers from the most relevant conferences in different areas. It is an informal conference with no proceedings, that is, the collections of papers published in the context of an academic meeting. Instead, people submit their best papers that have already been published and, therefore, it is the big meeting of those working in areas such as logic for computer science and automata," says Barceló, PhD in computer science and researcher at the National Center for Artificial Intelligence (CENIA) and the Millennium Institute Foundational Research on Data (IMFD).

The academic adds that, to offer a broad look at the latest advances in the different areas covered by the conference, each year the organizers include speakers who are renowned researchers in their respective fields. In this edition, alongside Barceló, Albert Atserias (Polytechnic University of Catalonia), Mikołaj Bojańczyk (University of Warsaw, Poland) and Laure Daviaud (University of East Anglia, UK) will be presenting. "In addition to people who present papers in just 10 minutes, there are the keynote presenters who give longer one-hour talks. In my case, this is the first time I've been invited," he says.

Pablo Barceló, director of IMC.

The talk is entitled "The Role of Logic in Advancing Machine Learning: Three Case Studies" and will cover case studies that highlight the essential role of logic in improving the understanding of modern machine learning architectures. The first two are based on the papers "Logical Languages Accepted by Transformer Encoders with Hard Attention" and "The Logical Expressiveness of Graph Neural Networks", whose co-authors include, respectively, Alexander Kozachinskiy (postdoc at IMC UC / CENIA / IMFD) and Juan Reutter (academic at IMC / Department of Computer Science UC and director of IMFD). The third is based on "A Uniform Language to Explain Decision Trees", whose co-authors include Marcelo Arenas (IMC / Department of Computer Science UC and IMFD researcher).

"What I want to show is that the logical aspects of computer science can be used to understand the capabilities of current artificial intelligence models. We work in three areas: one concerns graph neural networks, another is the expressive power of transformers, which are the architectures behind language models like ChatGPT. Finally, we address the interpretability of the models, that is, how to explain the decisions they make. In each of these areas we show that logic can be an important tool to understand what can be done and what the computational cost is," says Barceló.

The Transformers Revolution

Transformer models originated in a paper titled "Attention Is All You Need", published by Google researchers in 2017, and quickly became one of the most influential developments in the field of machine learning. As the IMC director points out, the architecture is fundamental to OpenAI's cutting-edge language models such as ChatGPT, and was also key in the creation of software such as DeepMind's AlphaStar.

"Transformers are language models that recognize sequences of symbols, of words. So, from a mathematical point of view, it is interesting to understand which languages can be recognized by transformers and which cannot. For that, what we do is formalize a computational model behind the transformers and study that model based on which languages it can accept, which generates links with the logical theory of languages and the theory of automata, which are also mathematical objects that process sequences," says Barceló.
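To make the idea of "accepting a language" concrete, here is a minimal sketch of an automaton, the kind of sequence-processing object Barceló mentions. It is not a transformer and is not taken from the paper; the language chosen (words over the letters a and b with an even number of a's) and the name `accepts_even_as` are illustrative assumptions.

```python
# Illustrative sketch only: a two-state finite automaton, not a transformer.
# Hypothetical language: words over {a, b} containing an even number of 'a'.

TRANSITIONS = {
    ("even", "a"): "odd",
    ("odd", "a"): "even",
    ("even", "b"): "even",
    ("odd", "b"): "odd",
}


def accepts_even_as(word: str) -> bool:
    """Return True if the automaton accepts `word`."""
    state = "even"  # start state: zero 'a' symbols read so far
    for symbol in word:
        # Raises KeyError for symbols outside the alphabet {a, b}.
        state = TRANSITIONS[(state, symbol)]
    return state == "even"  # "even" is the only accepting state


if __name__ == "__main__":
    for w in ["", "ab", "abba"]:
        print(repr(w), accepts_even_as(w))
```

The set of all words for which the function returns True is the language recognized by this automaton. The question described in the quote is the analogous one for a formal model of transformers: which such sets of words can the model accept, and which can it not.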

Transformers have become essential for applications such as ChatGPT.

This work is essential for the current development of transformers, whose application can be seen in chatbots, virtual assistants and other interactive artificial intelligence systems where a dynamic conversation with users is vital. "Today we are seeing that they can generate and enhance texts. But as these models are refined, they will also be used to handle multimodal data, which is not just text, but also encompasses images or sounds. These transformers will be able to extract information from all these sources as if it were a single type of data," explains the researcher.

The potential of networks

A graph is a mathematical data structure consisting of a set of vertices (also called nodes) and a set of edges connecting these vertices. In the 1980s, researchers began to explore ways to apply neural networks to data organized as graphs, although it was not until the early 2010s that graph neural networks became more widely known, thanks to the development of new learning architectures and algorithms.

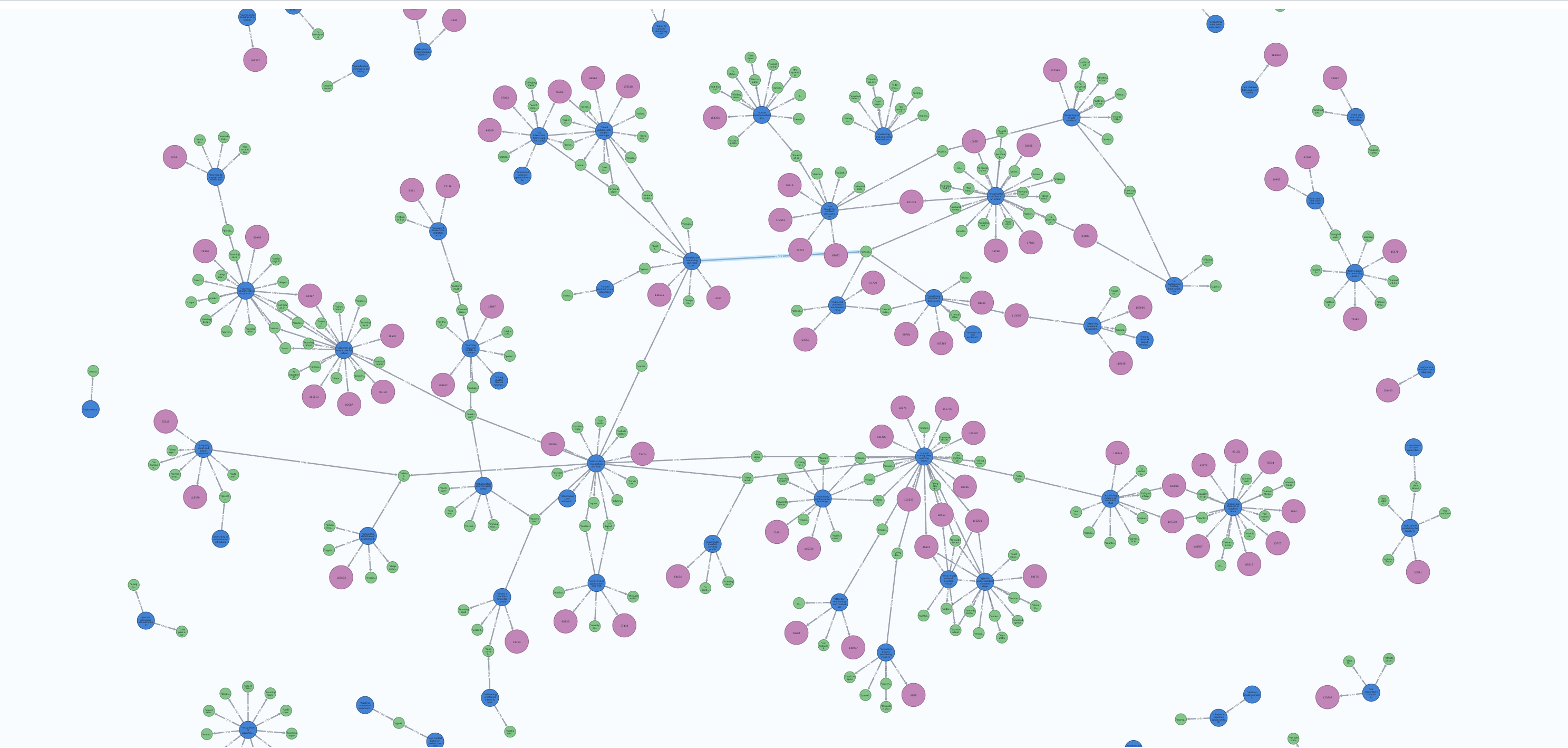

"These neural networks are models that process information that is structured as graphs. We analyze which properties of graphs can be recognized by a neural network of this type, and for that we use logical methods. We show that any property that can be expressed in a certain logic can be expressed by a graph neural network, and vice versa: if there is a logical expression that can be recognized by a graph neural network, then it is expressible in this logic. It's like a one-to-one relationship between the two," explains Barceló.
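As a rough illustration of what it means for a graph neural network to recognize a logical property, the sketch below computes one round of neighbor aggregation on a toy graph. The article does not name the logic involved, so the property used here ("the node has at least two red neighbors"), the example graph and the function name are all assumptions made for illustration.

```python
# Illustrative sketch only: one aggregation step on a hypothetical toy graph.
# Property checked at each node: "at least two of my neighbors are red".

# Undirected graph stored as an adjacency list (hypothetical data).
GRAPH = {
    "a": ["b", "c", "d"],
    "b": ["a"],
    "c": ["a"],
    "d": ["a"],
}

# Input feature of each node: 1 if the node is red, 0 otherwise.
RED = {"a": 0, "b": 1, "c": 1, "d": 0}


def at_least_two_red_neighbors(graph, red):
    """Return 1 for nodes with two or more red neighbors, else 0."""
    result = {}
    for node, neighbors in graph.items():
        # Aggregate: sum the feature over the node's neighbors.
        total = sum(red[n] for n in neighbors)
        # Combine: shift by one and clip to [0, 1] (a truncated ReLU).
        result[node] = min(max(total - 1, 0), 1)
    return result


if __name__ == "__main__":
    print(at_least_two_red_neighbors(GRAPH, RED))
```

Node "a" has two red neighbors ("b" and "c"), so it is the only node mapped to 1. The sum-then-clip step plays the role of a single network layer with fixed weights; no training is involved in this sketch.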

Graph neural networks have undergone significant advances in recent years.

Today, these networks have applications in a variety of fields. "For example, graph neural networks are used for fraud detection in banks. If you have a transaction graph, with a neural network you can start to detect areas where there are operations that look strange. They are also used to optimize transportation networks. In the development of new drugs they are also very useful, because they can show that drugs that tend to attack certain diseases more effectively stick to a certain molecular structure. With that information, you can go on to generate another new drug," says the IMC director.

Explainable artificial intelligence

The third study to be presented by Barceló in France addresses the search for explainable artificial intelligence and demonstrates how first-order logic can be used to design languages that state, evaluate and compute explanations for decisions made by machine learning models.

"In the end, the models work as black boxes, since we understand very little about how they make decisions. That is why there is an increasingly important tendency to try to design models that are more explainable, that in some way provide reasons why they made certain decisions," says the academic.

An example is when a bank uses one of these models to grant or reject a loan to a client: "It is important to know on what criteria it based its decision and not that the model simply provides an answer. In the end, these criteria are mathematical notions that can also be formalized through logic." The goal today, he adds, is for the "explanations that are obtained to provide mathematical guarantees, and that they are the best or the most concise or efficient".
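The loan example can be sketched in code under some loudly stated assumptions. The decision tree, the feature names and the notion of explanation used below (a smallest set of features whose values alone force the decision, sometimes called a minimum sufficient reason) are hypothetical choices made for illustration; the article does not specify them, and this is not the language proposed in the paper.

```python
from itertools import combinations, product

# Hypothetical boolean features of a loan applicant.
FEATURES = ["stable_income", "has_debt", "has_collateral"]


def tree(x):
    """Hypothetical decision tree: True means the loan is granted."""
    if x["stable_income"]:
        return (not x["has_debt"]) or x["has_collateral"]
    return False


def is_sufficient(instance, subset):
    """Check whether fixing only the features in `subset` forces the decision."""
    free = [f for f in FEATURES if f not in subset]
    target = tree(instance)
    # Try every possible value of the features that are not fixed.
    for values in product([False, True], repeat=len(free)):
        candidate = dict(instance)
        candidate.update(zip(free, values))
        if tree(candidate) != target:
            return False
    return True


def minimum_sufficient_reasons(instance):
    """Return all smallest feature sets that force the tree's decision."""
    for size in range(len(FEATURES) + 1):
        found = [set(s) for s in combinations(FEATURES, size)
                 if is_sufficient(instance, s)]
        if found:
            return found
    return []


if __name__ == "__main__":
    client = {"stable_income": False, "has_debt": True, "has_collateral": False}
    print(tree(client), minimum_sufficient_reasons(client))
```

For this client the loan is rejected, and the only smallest explanation is the lack of a stable income: that single fact guarantees rejection whatever the other two features are. The brute-force search is exponential in the number of features, which hints at why the computational cost of explanations is itself a research question.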

Source: IMC UC