News

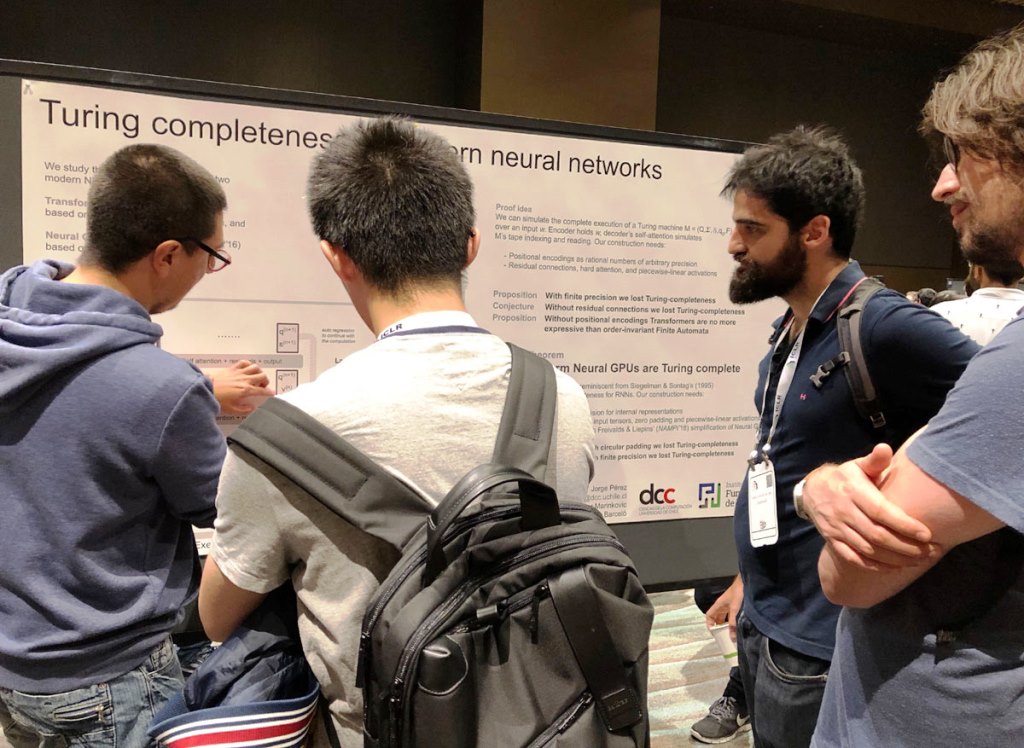

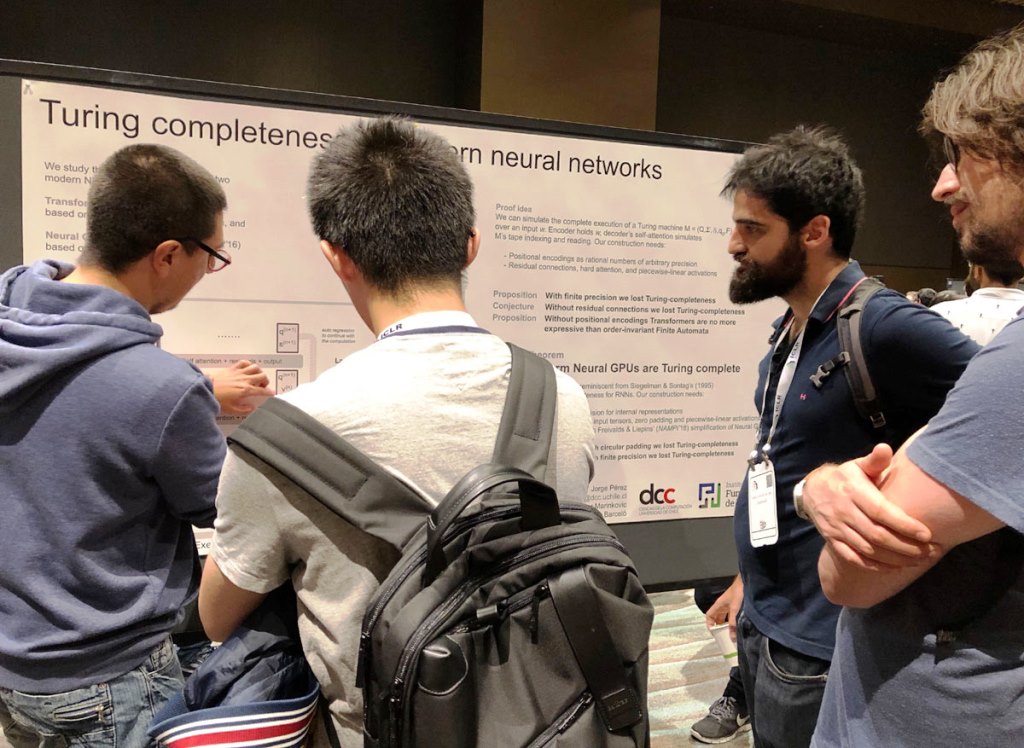

“On the Turing Completeness of Modern Neural Network Architectures” is the title of the paper that Jorge Pérez, an academic in the Department of Computer Science (DCC) of the University of Chile and a researcher at the IMFD, presented at the International Conference on Learning Representations (ICLR 2019), held in New Orleans, United States. ICLR is considered the most important international conference in the field of deep learning.

The study was conducted by Jorge Pérez; Pablo Barceló, also an academic at the DCC and a researcher at the Millennium Institute Fundamentals of Data (IMFD); and Javier Marinkovic, an undergraduate student in Computer Science.

Jorge Pérez explained that deep neural networks are used today to make decisions in many areas, such as image and text classification, machine translation and content recommendation. However, he noted that the computational power of these networks is not yet fully understood from a theoretical point of view. “In our paper we analyzed the computational power of one of the most popular deep neural network architectures today, called the Transformer. This is the architecture Google uses, for example, in its machine translation system, but it has been used for many other tasks, including generating music.” (A piece of music created by this network can be found here: https://bit.ly/2HlOoV2.)

In this research, the academics proved that the computational power of the Transformer is as high as is possible for an automatic computing machine, a property known as Turing completeness. “This means that the architecture Google uses to translate or generate music has the power to implement any possible algorithm. Before our result, it was not clear where the limit of this neural network's power lay. The article also shows which parts of the network's architecture make it so powerful, and that if certain parts were removed, it would indeed lose power,” Pérez concluded.

You can find a summary of the paper at https://bit.ly/30q2E6q

DCC Communications.